A multi-policy hyper heuristic for multiobjective optimization

A poster for the GECCO23 Conference, July 15-19th, 2023, Lisbon

Selection hyper-heuristics (SSHH) are search strategies that can be successfully applied to multi-objective optimization (MOO) problems. They showed resilient results to different types of optimization problems, which may come at the cost of a longer resolution time than other metaheuristics. Learning a single selection rule in MOO might be limiting because the information from the multiple objectives might be unexploited. A novel approach named the multi-policy approach is proposed and discussed to further enhance the searching ability of sequence-based selection hyper-heuristics, together with an ad-hoc learning rule. The availability of a set of problem-specific low-level heuristics is assumed and the resolution of a specific class of combinatorial problems, i.e., vehicle routing problems (VRPs), is considered for a numerical example. The multi-policy approach showed the ability to learn different selection rules for each objective and to change them during the execution of the algorithm, i.e., when solutions are getting closer to the Pareto front. The availability of parallel computation can speed up the calculation since the methodology is suitable for parallelization. The proposed methodology was found to produce production-ready solutions and the approach is expected to be successfully extended to problems different from the VRP.

Motivations

- Selection hyper-heuristics (SSHH) are search strategies that can be successfully applied to multiobjective optimization problems

. - Learning a single selection rule in a SSHH might be limiting because the information from the multiple objectives might be unexploited

. - Algorithms based on sequences of problem-specific low-level heuristics can efficiently solve complex combinatorial problem

.

Research goals

- A novel approach named the multi-policy approach is proposed to further enhance the searching ability of sequence-based selection hyper-heuristics.

- The multi-policy approach performs online learning of the select policies. One selection policy per objective is learned using objective-wise information.

- The proposed algorithm is tested on a real-world instance of the vehicle routing problem with pickup and delivery (VRPPD).

Methodology

A low-level heuristic (LLH) is a rule that modifies the decision variables \(\mathbf{z}\) of the problem under analysis. A set \(H\) of \(m\) LLHs is assumed to be available to modify the solutions \(\mathbf{z} \in Pop\).

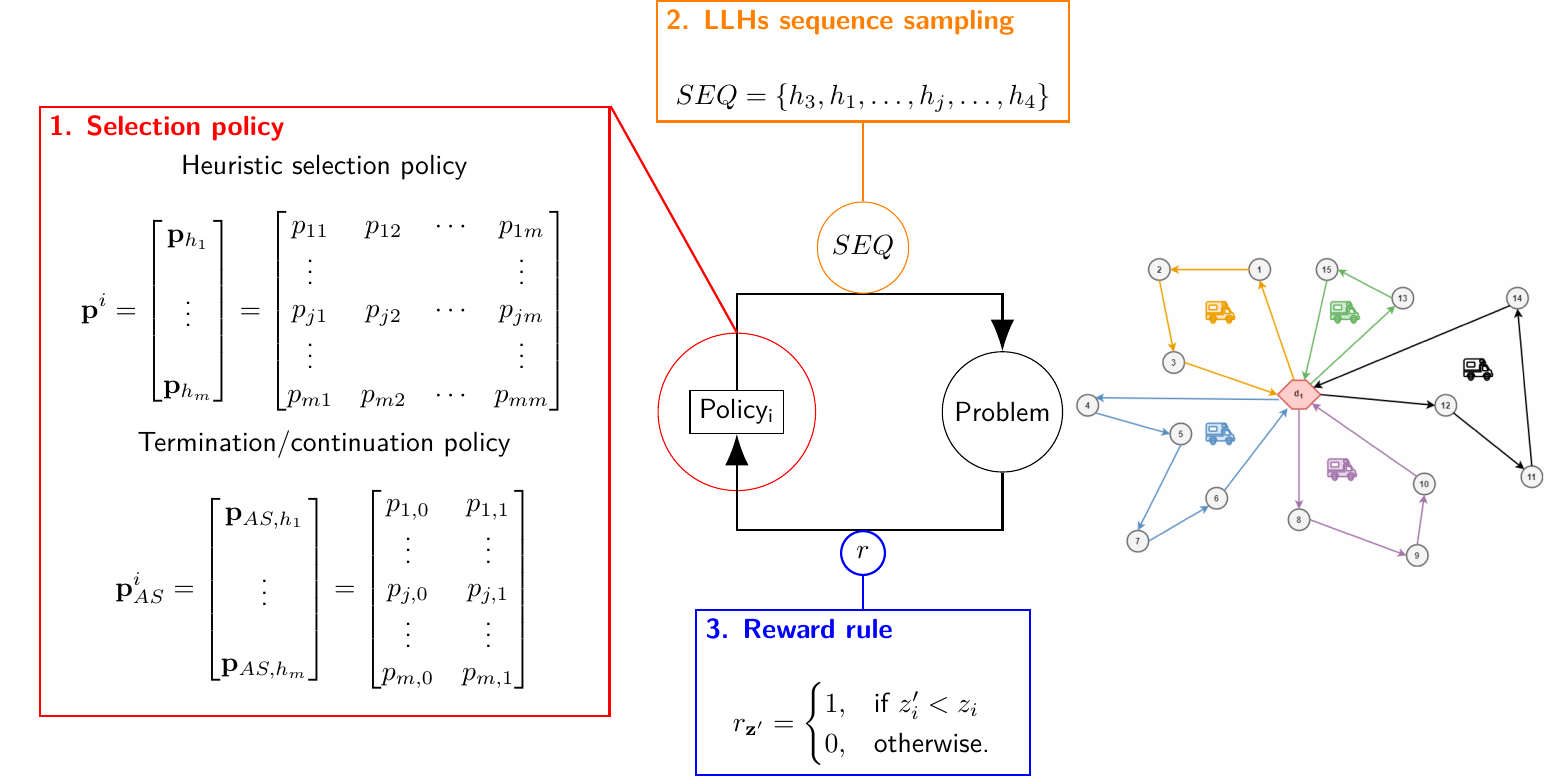

A selection policy is an ensemble of Markov decision models that alternates the selection of LLHs \(h\) and sequence-termination signals \(AS\) (see box 1 in Figure 1). There are as many selection policies as the number $N$ of objectives.

At each iteration, a selection policy produces a sequence of low-level heuristics \(SEQ\) (see box 2 in Figure 1) to be applied to a solution \(\mathbf{z}\).

For each new solution \(\mathbf{z'} \in Pop'\) that was improved by a sequence of heuristics \(SEQ\), the reward rule (see box 3 in Figure 1) attributes a score to all the couples of subsequent heuristics in \(SEQ\).

Algorithm architecture

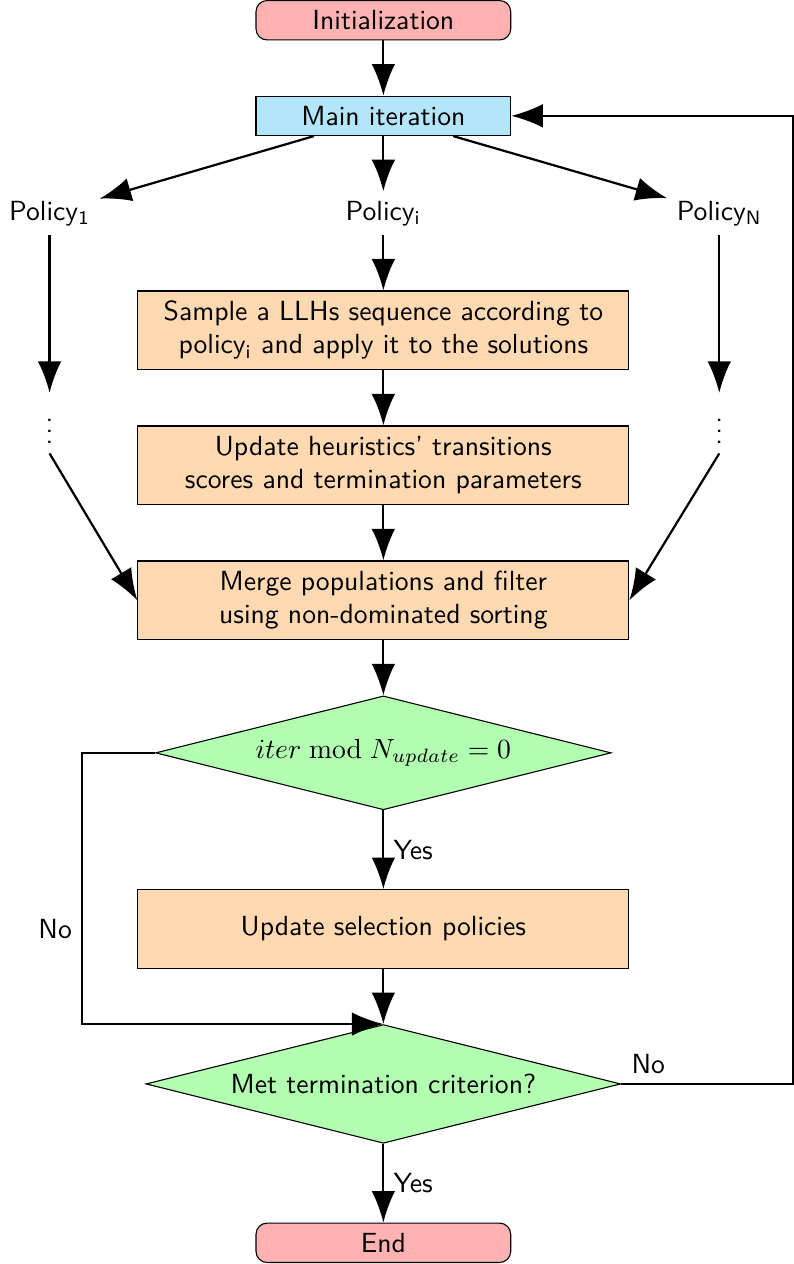

The multi-policy hyper heuristic foresees an initiation phase followed by the main loop. The main loop is executed separately for each policy, which makes the procedure suitable to be parallelized. The termination criterion chosen for the poster was the number of iterations; time and convergence of a performance metric can be used as well.

As shown in Figure 2, the main loop is followed by the update of selection policies and a check for the termination condition.

Experimental setting

Numerical experiments were carried out to solve a three-objective version of the vehicle routing problem with pickup and delivery (VRPPD).

The multi-policy algorithm was tested on a single instance of the VRPPD with 60 deliveries and 4 pickup points. Figure 3 shows an example of the Pareto front obtained by the multi-policy algorithm.

Data are inspired to a real-world case study: a geography with non-Euclidean distances was used, and goods to be delivered presented different weights and volumes.

Selected results

- Selection policies evolve over time: for example, comparing \(\mathbf{p}^{(eco)}\) at iteration 25 and 50 in Figure 4, the selection probability distribution \(p_{h_4}\) changes significantly.

- Selection policies evolve differently from each other: for instance, \(\mathbf{p}^{(eco)}\) and \(\mathbf{p}^{(env)}\) at iteration 50 in Figure 4 and 5 present significantly different values for \(p_{h_0}\) and \(p_{h_1}\).

- The learned policies are sensitive to: (1) the reward value \(r\) that is used, (2) the number of iterations between policy updates \(N_{update}\), and (3) the weight that is used when updating the probabilities in the Markov decision model.

Conclusions and future research

- Learning selection policies is not straightforward. Extensive testing of the learning rule is required over a large and diverse set of instances of the problem studied.

- Online learning in multiobjective combinatorial problems is time expensive. Either a high-performance implementation of the algorithm exists, or offline learning should be considered.

- Comparative analysis of the proposed multi-policy hyper heuristic is required. Algorithms such as multiobjective local search (MOLS) and choice function hyper heuristic (CFHH) will be considered for testing over large (e.g., up to 500 nodes) and diverse (e.g. geography, load, time windows) problem instances.

- Sampling of LLH sequences yield widely variable execution times when the termination criterion is the number of iterations, which might be undesirable in the production phase.